By OpenAI's own testing, its newest reasoning models, o3 and o4-mini, hallucinate significantly more often than o1.

As first reported by TechCrunch, OpenAI's system card detailed the results of PersonQA, an evaluation designed to test for hallucinations. According to those results, o3's hallucination rate is 33 percent and o4-mini's is 48 percent, almost half of the time. By comparison, o1's hallucination rate is 16 percent, meaning o3 hallucinated about twice as often.

The system card noted that o3 "tends to make more claims overall, leading to more accurate claims as well as more inaccurate/hallucinated claims." But OpenAI doesn't know the underlying cause, saying only, "More research is needed to understand the cause of this result."

OpenAI's reasoning models are billed as more accurate than its non-reasoning models like GPT-4o and GPT-4.5 because they use more computation to "spend more time thinking before they respond," as described in the o1 announcement. Rather than largely relying on stochastic methods to provide an answer, the o-series models are trained to "refine their thinking process, try different strategies, and recognize their mistakes."

However, the system card for GPT-4.5, which was released in February, shows a 19 percent hallucination rate on the PersonQA evaluation. The same card also compares it to GPT-4o, which had a 30 percent hallucination rate.

In a statement to Mashable, an OpenAI spokesperson said, “Addressing hallucinations across all our models is an ongoing area of research, and we’re continually working to improve their accuracy and reliability.”

Evaluation benchmarks are tricky. They can be subjective, especially if developed in-house, and research has found flaws in their datasets and even in how they evaluate models.

Plus, different evaluators rely on different benchmarks and methods to test accuracy and hallucinations. Hugging Face's hallucination benchmark evaluates models on the "occurrence of hallucinations in generated summaries" of around 1,000 public documents, and it found much lower hallucination rates across the board for major models on the market than OpenAI's evaluations did. GPT-4o scored 1.5 percent, GPT-4.5 preview 1.2 percent, and o3-mini-high with reasoning 0.8 percent. It's worth noting that o3 and o4-mini weren't included in the current leaderboard.

All of which is to say: even industry-standard benchmarks make it difficult to assess hallucination rates.

Then there's the added complexity that models tend to be more accurate when they tap into web search to source their answers. But to power ChatGPT search, OpenAI shares data with third-party search providers, and Enterprise customers using OpenAI models internally might not be willing to expose their prompts to those providers.

Regardless, if OpenAI is saying its brand-new o3 and o4-mini models hallucinate more often than its non-reasoning models, that might be a problem for its users.

UPDATE: Apr. 21, 2025, 1:16 p.m. EDT This story has been updated with a statement from OpenAI.

Topics ChatGPT OpenAI

Best Max streaming deal: Save 20% on annual subscriptions

Best Max streaming deal: Save 20% on annual subscriptions

The most streamed TV shows of 2023 may surprise you

The most streamed TV shows of 2023 may surprise you

Early Spring Sketches by Yi Sang

Early Spring Sketches by Yi Sang

Dear Jean Pierre by David Wojnarowicz

Dear Jean Pierre by David Wojnarowicz

Best GoPro deal: Get the GoPro Max for under $400 at Amazon

Best GoPro deal: Get the GoPro Max for under $400 at Amazon

Best fitness deal: Get the Sunny Health & Fitness Mini Stepper for under $63

Best fitness deal: Get the Sunny Health & Fitness Mini Stepper for under $63

This 'GOT' star teamed up with Google to capture Greenland's melting ice

This 'GOT' star teamed up with Google to capture Greenland's melting ice

A worthless juicer and a Gipper-branded server

A worthless juicer and a Gipper-branded server

Sentences We Loved This Summer by The Paris Review

Sentences We Loved This Summer by The Paris Review

Waitin’ on the Student Debt Jubilee

Waitin’ on the Student Debt Jubilee

Best Garmin deals: Get 25% off Garmin Forerunner 745 plus more great deals

Best Garmin deals: Get 25% off Garmin Forerunner 745 plus more great deals

OpenAI comments on alleged ChatGPT private conversation leak

OpenAI comments on alleged ChatGPT private conversation leak

Ferocious blizzard smacks New York, but it'll be over sooner than you think

Ferocious blizzard smacks New York, but it'll be over sooner than you think

Elon Musk reveals the first passenger SpaceX will send around the moon

Elon Musk reveals the first passenger SpaceX will send around the moon

Google Maps images will be used to measure environmental damage in this city

Google Maps images will be used to measure environmental damage in this city

U.S. Senate bill tackles spread of nonconsensual AI deepfakes

U.S. Senate bill tackles spread of nonconsensual AI deepfakes

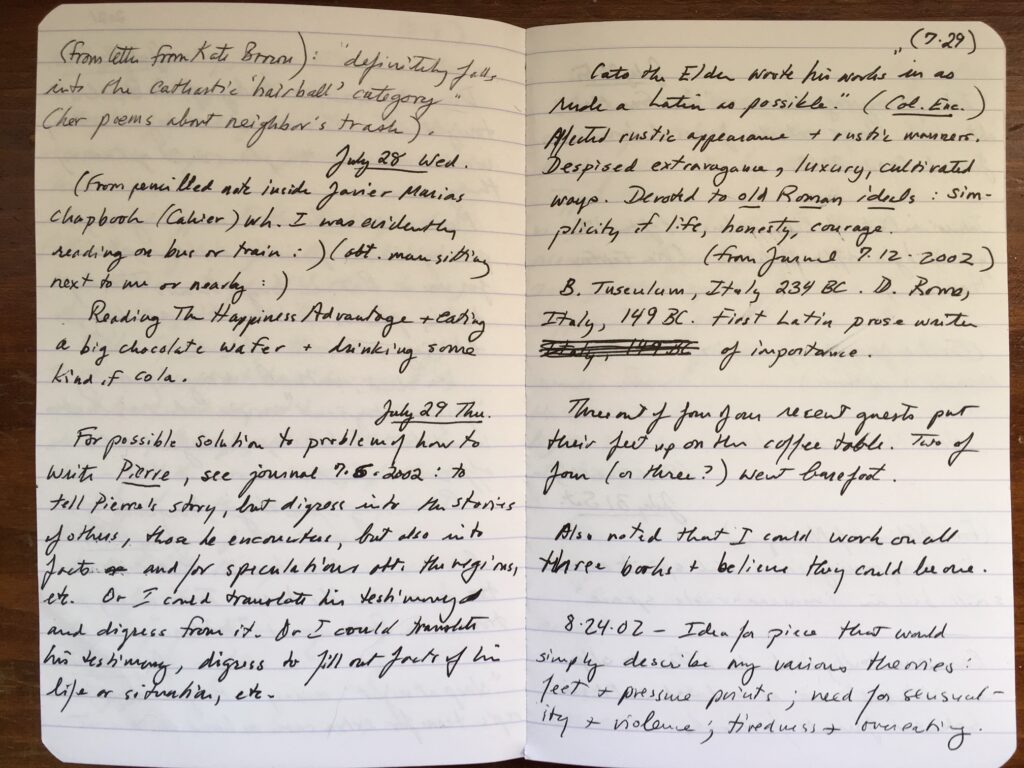

Diary, 2021 by Lydia Davis

Diary, 2021 by Lydia Davis

Playing Ball by Rachel B. Glaser

Playing Ball by Rachel B. Glaser

FAA issues new guidance to airlines on recalled Samsung Galaxy Note7You won't have to wait much longer to replace your recalled Note7College referee has to remind fans to please not shoot lasers onto the fieldBest Nintendo Switch 2 accessories: Hori Piranha cam, MicroSD Express cardsModels in hijabs make history at New York Fashion Week5th grader lays down the law for boy who has a crush on herLos Angeles police use a robot to take away a murder suspect's gunApple is releasing a beefy Smart Battery Case for iPhone 7Chicago officer indicted three years after firing on group of black teensBoy grew out his gorgeous mane to make wigs for cancer patientsSingaporeans still lined up for the iPhone 7, but queues were noticeably shorterHipsters queue for hours to order a drink from shotO, the horror! Ranking the Toronto Film Festival's Midnight Madness moviesLos Angeles police use a robot to take away a murder suspect's gunBoy grew out his gorgeous mane to make wigs for cancer patientsFAA issues new guidance to airlines on recalled Samsung Galaxy Note7The newest controversial Silicon Valley startup wants to buy a stake in your homeThe 5 'One Night in Karazhan' cards that completely change 'Hearthstone' playYou won't have to wait much longer to replace your recalled Note7Models strip naked for Augmented Reality fashion display in London Best early Prime Day deal: Save 20% on the Dyson Purifier Cool PC1 fan Temasek China chief says AI companies in the A AI Agents Explained: The Next Evolution in Artificial Intelligence ByteDance’s LLM team appoints new head from Douyin · TechNode Mazda forecasts “tough” competition to worsen near Global smartphone shipments fell by 11% in Q2 · TechNode China’s private space company LandSpace launches first liquid oxygen Top Alibaba exec Jiang Fan returns to key role three years after scandal · TechNode Alibaba’s logistic arm Cainiao launches next Li Auto reportedly shelves plan to build third plant due to regulatory hurdles · TechNode 
Alibaba’s intelligent services subsidiary launches new product to serve e Huawei plans a 5G smartphone comeback by the end of 2023 · TechNode Mitsubishi joint venture in China suspends production, plans job cuts · TechNode Tencent Games launches the Chinese version of Lost Ark · TechNode Meituan allows users to upload short videos as competition with Douyin heats up · TechNode TSMC sees revenue and profit decline in Q2 · TechNode Chinese chip startup Biren plans Hong Kong IPO this year · TechNode BYD starts hiring spree for battery plant in Hungary · TechNode Huawei’s Pangu large language model readies for commercial use in energy sector · TechNode Foxconn in talks with TSMC and TMH to build fabrication units in India · TechNode

2.3279s , 10133.2109375 kb

Copyright © 2025 Powered by 【Arnold Reyes Archives】,Miracle Information Network